On the Radical Anti-Institutionalism of Internet Intellectuals

I recently attended a talk by Ethan Zuckerman, the director of the MIT Center for Civic Media, advertised as addressing the question “is digital media changing what it means to be an engaged citizen?” As a blogger, founder of three hyperlocal news websites, and now student of technology in (government-led) urban planning, I was interested in what he would have to say. The talk, which lasted at least 45 minutes, was ostensibly on this, but I found it deeply troubling. Zuckerman, officially a “research scientist” at MIT, talked about government, media, technology, and society with little mention of any previous research. I waited, in vain, to hear even the barest mention of previous thinkers on these topics (for example: the Federalist Papers, de Tocqueville, Dewey, Lippmann, Schumpeter, Arnstein, Pateman, Castells, Fung, work in communications and political science journals, etc.). (It looks like what I heard was very similar to his keynote at a recent conference, see notes and a video of the talk.)

I have no doubt he is familiar with some of these thinkers, and probably has something to say about them. But they were largely omitted from the talk aside from perfunctory mention of several recent popular books. This presents a puzzle: why? Is it possible to conduct research on a topic by ignoring previous research? If so, why would you want to do it?

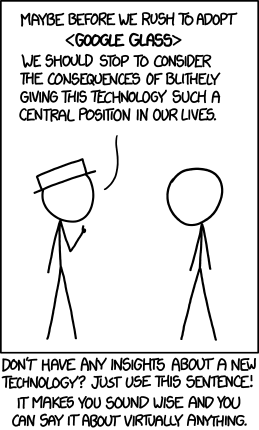

In hindsight, I should not have been surprised. The talk was an example of a broader milieu of “Internet intellectuals” who eschew previous research and thinking, or treat it in fragmentary or limited ways. I am sympathetic to the arguments put forward by Evgeny Morozov, who has gleefully attacked the most notoriously vapid talking heads (notably Tim O’Reilly and Jeff Jarvis), but I lack his indignation. The standards for public debate have always been fast and loose, and it’s probably good that way. In the long sweep of history, the junk is forgotten and gems of insight are remembered. However, academia is different. There is a norm of at least acknowledging other points of view, and attempting to take them seriously. Certainly, deep cleavages remain between fields, but they are for the most part about differing basic assumptions about reality or how to conduct research, not a willful ignorance of the alternatives. Good scholars read broadly and continually question their own basic assumptions.

In light of this, I concluded Zuckerman was choosing to omit previous research from this talk because he believes, at some level, that it is not required for the discussion. Perhaps the Internet has rendered everything totally new. We need new ways of thinking, and therefore the old ideas are not only a hindrance but must be deliberately exorcised. There is something to this point of view. Existing theories and worldviews are powerful blinders, and good empirical research often involves looking closely at reality before considering how to interpret it. This is required to make new insights, whether tweaks to earlier theories or totally novel ideas. However, research cannot be done in a total vacuum. All new knowledge is related, in some way, to previous thinking. Even boldly innovative work should acknowledge the flawed models it hopes to supersede.

Working on my dissertation, at the urging of my dissertation committee member Annette Kim, I explored some of the literature on institutions in society. Scholars in multiple fields began to realize in the late 80s that institutions play a key role in understanding the economy, government, and other social phenomena. Economists focused on analyzing why institutions might be efficient for “rational actors,” but sociologists pointed out that institutions consist not only of formal rules and structures, but also of broader cultural norms and ways of thinking. For example, companies follow lots of standard practices not because they objectively know they are efficient, but because they are influenced by broader cultural assumptions about how things should be done. Sociologists also argue that organizations and companies have missions broader than simply making a profit. In some deep way, institutions actually organize our behavior and create structures of meaning.

I realized the Internet-centric worldview is radically oriented towards deinstitutionalization. In short, since institutions are “socially constructed” (meaning they exist partly because we believe in them), if you get enough people to believe they don’t exist they actually won’t. Now, this may be useful if the institution you are attacking has no value or is harmful, but I’m not sure this is always the case. I don’t fantasize about the possibility of some type of benevolent anarchist utopia. Not only because my political opinions differ, but also because I think institutions perform valuable functions for us.

An example will help illustrate this. In a classic study of an impoverished town in Southern Italy in the 1950s, political scientist Edward Banfield (whose later work I would criticize) tried to figure out why it lacked a functional government and had an anemic economy. He concluded it was because of a local culture that made it difficult, if not impossible, to create institutions of any type. In short, institutions are important because they do stuff. Without them, modern life would be impossible.

Luckily, for the most part the deinstitutional thinking of “Internet intellectuals” has run up against the hard brick wall of reality. A key example is copyright law, which despite the valiant efforts of activists remains as deeply entrenched as ever, although there have been great strides made in voluntary copyright liberalization. Activists like Aaron Swartz are subjected to unrelenting state power (in my view expectedly, although not appropriately, harsh). Moral arguments aside, good activism must be able to diagnose the nature of the enemy. In this case, copyright laws are held up by powerful organized interests such as corporations that are deeply invested in their maintenance. I don’t like or condone what is happening, but I understand it.

Another example of this tendency is in online education. To a certain degree, universities define what higher education should be. There is the idea that students select a major or field of study, which has a set curriculum, and then students pick specific topics and use accepted research methods to build on or challenge the existing knowledge. The idea of liberal arts education is that education isn’t merely for career purposes, but to broaden and deepen the human experience, attuning students to arts, ethics, and other dimensions of culture.

Needless to say, this is in sharp contrast to many popular online education models. Curriculums are largely nonexistent, and the focus is on chopping up complex ideas into discrete snippets presented through short videos or exercises. The more nuanced practitioners are aware of these dimensions, but the loudest proponents of new online education models, inevitably from engineering or computer science, are throwing out the babies (curriculum, liberal arts, in-person seminars, the social experience of attending college, etc.) with the bathwater, because they never valued the babies very much to begin with. For them, education is a set of discrete, narrow skills that are valued in industry. Full stop. The rest is for your spare time.

I am not a luddite opposed to technological innovation. However, institutional reform should be done carefully, not unthinkingly. We accept “disruption” in the private sector, but always under the watchful eye of regulations. But private businesses merely (for the most part) make stuff. Government and educational institutions play a much more central role in our society, and we may be blind to their deep and nuanced functions. They are not all good or bad, and certainly should not be above thoughtful reform. In fact, I wrote previously that technology-centered efforts to reform city government are significant specifically because they are at least interested in the important issue of government reform.

I don’t think most of this is very original or profound, and the naive anarchism of Internet-centric people is legendary. However, what is surprising to me is the extent to which it has penetrated MIT, an elite educational institution. In fact, the entire MIT Media Lab, by design independent from formal disciplines, has been engaging more and more in social questions with limited links to existing scholarship and theories in the areas they are moving into.

Oddball centers exist throughout academia if you look hard enough, and maybe some of them are actually onto something the rest of us are missing. The point, though, is that universities share a spirit of inquiry and examination. All knowledge should be provisional and open to scrutiny. Therefore this is a direct address to the MIT Center for Civic Media. What have I got wrong? How do you engage with previous research, and if you ignore it, why? Not everyone shares your assumption that everything is totally new. I’m a too-rare academic willing to entertain the idea that some things may be, and that new models are needed. Let’s meet in the middle and see where it leads.

(This post was originally written for LinkedIn. Go to the LinkedIn version to engage in the conversation.)

I’ve been scratching my head trying to think about how to understand the different facets of labor that are shaping contemporary life. I don’t have good answers; I only have some provocations and a few questions, but I would love to hear your thoughts.


As a teenager, I was a sandwich artist. I’d arrive at work, don my uniform and clock in. I had a long list of responsibilities – chopping onions, cleaning the shop, preparing the food, etc. Everything was formulaic. I can still recite how to ask a customer if they want onions, pickles, lettuce, green peppers, or black olives. The job paid minimum wage and was defined by doing pre-specified tasks in an efficient and predictable manner with a smile. When my compatriots got fired, it was almost always for being late. In-between making sandwiches and doing the rote tasks, we would gossip and chat, complain about regulars and talk about run-ins with cops (who demanded free food which meant a dock in pay for whoever was working). And when my shift was over, I’d clock out and leave, forgetting about Subway even though the scent lingered and filled my car.

Today, I have my dream job. I’m a researcher who gets to follow my passions, investigate things that make me curious. I manage my own schedule and task list. Some days, I wake up and just read for hours. I write blog posts and books, travel, meet people, and give talks. I ask people about their lives and observe their practices. I think for a living. And I’m paid ridiculously well to be thoughtful, creative, and provocative. I am doing something related to my profession 80-100 hours per week, but I love 80% of those hours. I can schedule doctor’s appointments midday, but I also wake up in the middle of the night with ideas and end up writing while normal people sleep. Every aspect of my life blurs. I can never tell whether or not a dinner counts as “work” or “play” when the conversation moves between analyzing the gender performance of Game of Thrones and discussing the technical model of Hadoop. And since I spend most of my days in front of my computer or on my phone, it’s often hard to distinguish between labor and procrastination. I can delude myself into believing that keeping up with the New York Times has professional consequences but even I cannot justify my determination to conquer Betaworks’ new Dots game (shouldn’t testing new apps count for something??). Of course, who can tell if my furrowed brow and intense focus on my device are work-focused or not? Heck, I can’t tell half the time.

In the digital world, the line between what is fun and what is work is often complicated. There are people whose job it is to produce tweets and updates as a professional act, but they sit beside people in a digital environment who produce this content because it’s connected to how they’re socializing with their friends. Socializing, networking, and advertising are often intertwined in social media, making it hard to distinguish between professional and personal, paid labor and career advancement.

There are people who understand that they’re “on the job” because of where they are physically, but there are also people whose model of work is more connected to their interaction with their Blackberries or the kinds of actions that they’re taking. And then there are people like me who have lost all sense of where the boundaries lie.

There is tremendous anxiety among white collar workers about how blurry the boundaries have gotten, but little consideration for how that blurriness is itself a mark of privilege. More often than not, those with more social status have blurrier boundaries around space, place, and time. Sometimes, this privilege comes with a higher paycheck, but freelance writers have a level of class privilege that is not afforded to the punch-in, punch-out workforce even if their actual income is paltry.

Often, there’s rampant financial and status inequality between those whose careers are defined by blurred boundaries and those who work in a prescribed manner. Many C-level execs justify their exorbitant salaries through the logic of risks and burdens without accounting for the freedoms and flexibility associated with this kind of work or recognizing the physical, psychological, and cultural costs that come with manual, service, or rote labor in prescribed environments. The freedom to control one’s own schedule has value in and of itself. Yet, not everyone with economic resources feels as though they are in control of their lives. And it’s often easier to blame the technology that tethers them than work out the dynamics of agency that are at work.

What’s at stake isn’t just that work is invading people’s personal lives or that certain types of labor are undervalued. It’s also that the notion of fun or social is increasingly narrated through the frame of work and productivity, advancement and professional investment.

Labor is often understood to be any action that increases market capitalization. But then how do we understand the practice of networking that is assumed as key to many white collar jobs? And what happens when, as is often the case in the digital world, play has capital value?

In academic circles, debates are raging over the notion of “free labor.” Much of what people contribute to social media sites is monetized by corporations. People don’t get paid for their data and, more often than not, their data is used by corporations to target them – or people like them – to produce advertising revenue for the company. The high profitability of major tech companies has prompted outrage among critics who feel as though the money is being made off the backs of individuals’ labor. Yet most of these people don’t see their activities as labor. They’re hanging out with friends or, even if they’re being professional, they’re networking. Accounting for every action and interaction as labor or work doesn’t just put a burden on social engagements; it brings the logic of work into the personal sphere.

Most of these dynamics predate the internet, but digital technologies are magnifying their salience. People keep returning to the mantra of “work-life balance” as a model for thinking about their lives, even as it’s hard to distinguish between what constitutes work and what constitutes life, which is presumably non-work. But this binary makes little sense for many people. And it raises a serious question: what does labor mean in a digital ecosystem where sociality is monetized and personal and professional identities are blurred?

As you think about your own professional practices, how do you define what constitutes work? How do you think labor should be understood in a networked world? And what does fairness in compensation look like when the notion of clocking in and clocking out is passé?

